Interpretable and Uncertainty-Aware Multi-Modal Spatio-Temporal Deep Learning Framework for Regional Economic Forecasting

Downloads

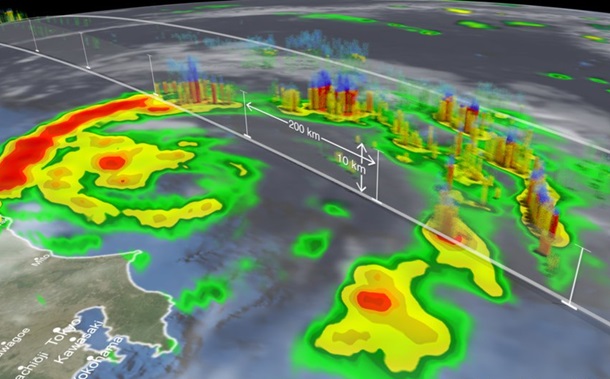

The objective of this study is to improve the accuracy, interpretability, and reliability of regional economic forecasting, a task essential for policy-making, infrastructure planning, and crisis management. Existing econometric and machine learning models often rely on linear assumptions, make limited use of heterogeneous data, and lack transparent uncertainty quantification. To address these limitations, we propose a unified multi-modal spatio-temporal deep learning framework that integrates satellite imagery, structured economic indicators, and policy documents through an adaptive cross-modal attention mechanism. The framework further incorporates a spatio-temporal cross-attention module to capture dynamic inter-regional dependencies and temporal patterns, and a Bayesian neural prediction head to quantify predictive uncertainty. Applied to a 13-year dataset covering 75 Chinese cities, the model reduces mean absolute error (MAE) by 37% relative to XGBoost and achieves a prediction interval coverage probability (PICP) of 92% for nominal 90% prediction intervals. Case studies further show that it can trace pandemic-induced economic shocks and reveal latent propagation pathways. The novelty of this work lies in an integrative architecture that jointly advances multi-modal fusion, interpretability, and uncertainty quantification, offering both methodological innovation and practical utility. The framework provides policymakers with transparent, risk-aware predictions and establishes a scalable foundation for next-generation economic forecasting.
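The abstract reports two evaluation quantities: the relative MAE reduction against XGBoost and the PICP of nominal 90% intervals. The sketch below shows one way such metrics can be computed. It is not the authors' evaluation code. It assumes that the Bayesian head yields Monte Carlo predictive samples for each region and period, that central intervals are taken from empirical quantiles of those samples, and that "reducing MAE by 37%" means 1 − MAE_model / MAE_baseline. The abstract specifies none of these, and all function names are hypothetical.

```python
import math
from statistics import mean


def empirical_quantile(sorted_xs, q):
    """Quantile of pre-sorted samples, linearly interpolating between order statistics."""
    pos = q * (len(sorted_xs) - 1)
    lo, hi = math.floor(pos), math.ceil(pos)
    return sorted_xs[lo] + (sorted_xs[hi] - sorted_xs[lo]) * (pos - lo)


def central_interval(samples, level=0.90):
    """Central prediction interval at a nominal level from (assumed) predictive samples."""
    xs = sorted(samples)
    alpha = (1.0 - level) / 2.0
    return empirical_quantile(xs, alpha), empirical_quantile(xs, 1.0 - alpha)


def picp(y_true, lower, upper):
    """Prediction Interval Coverage Probability: share of observations inside [lower, upper]."""
    hits = sum(1 for y, lo, hi in zip(y_true, lower, upper) if lo <= y <= hi)
    return hits / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error between observations and point predictions."""
    return mean(abs(y - p) for y, p in zip(y_true, y_pred))


def relative_mae_reduction(mae_model, mae_baseline):
    """Fractional MAE reduction versus a baseline (assumed definition; 0.37 means 37%)."""
    return 1.0 - mae_model / mae_baseline


if __name__ == "__main__":
    # Toy numbers, not data from the study: observations and 90% intervals for four region-years.
    y_true = [1.0, 2.0, 3.0, 4.0]
    lower = [0.5, 1.5, 3.2, 3.0]
    upper = [1.5, 2.5, 3.8, 5.0]
    print(picp(y_true, lower, upper))  # 0.75: the third observation falls below its interval
```

Under this reading, a PICP above the nominal level (92% against 90%) indicates intervals that are slightly conservative, that is, wider than strictly necessary, rather than under-covering.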

- This work (including HTML and PDF Files) is licensed under a Creative Commons Attribution 4.0 International License.